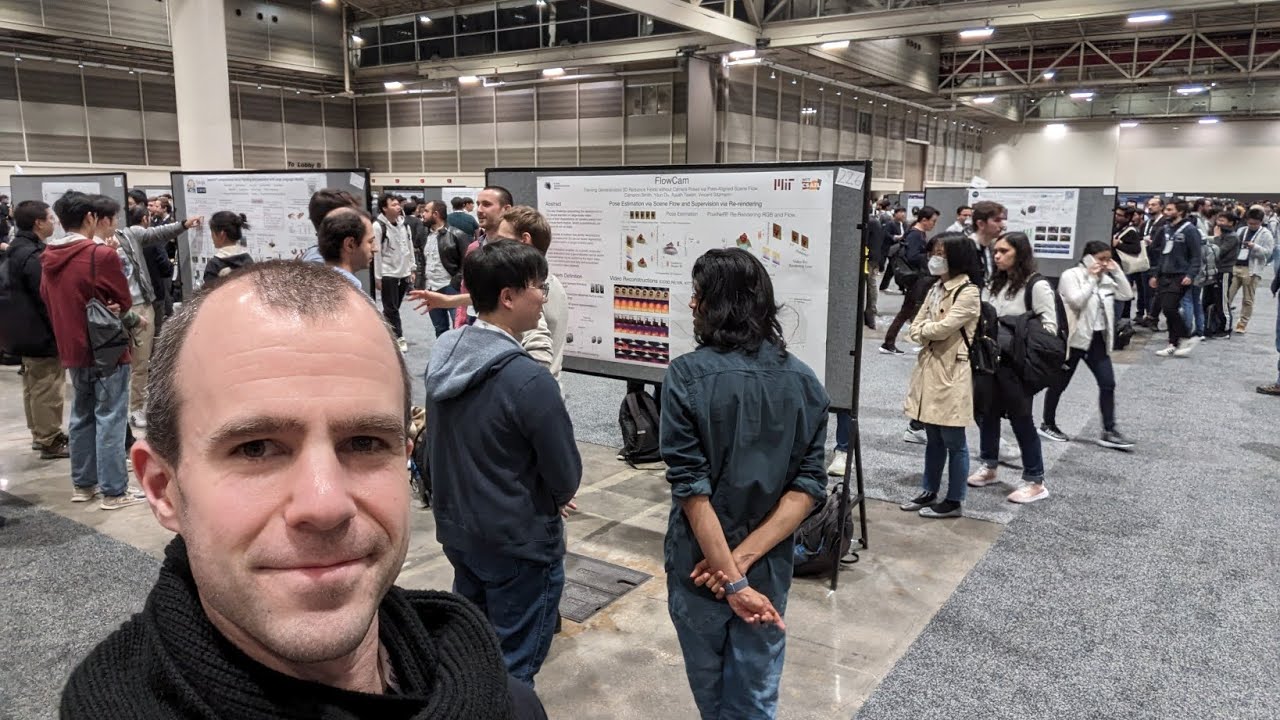

NeurIPS 2023 Poster Session 1 (Tuesday Evening)

Key Takeaways at a Glance

- 00:02 Key takeaway: Use big posters with minimal text and lots of pictures for effective communication.
- 01:24 Key takeaway: Camouflaging adversarial patches can make them difficult to detect.
- 04:59 Key takeaway: Exemplar-free continual learning allows learning new classes without forgetting old ones.
- 08:34 Key takeaway: Mahalanobis distance can improve feature space comparison.
- 12:26 Key takeaway: Inference results can vary depending on hardware and computation.
- 14:16 Key takeaway: Be aware that inference in machine learning is not deterministic.
- 14:21 Key takeaway: Conservative estimation of value functions in reinforcement learning.
- 15:03 Analytical solution for estimating the value function.
- 15:58 Introduction of a new network for Q.
- 17:02 Bonus term for policy performance.

1. Key takeaway: Use big posters with minimal text and lots of pictures for effective communication.

🥈85 00:02

The best posters have one big sentence, a few pictures, and minimal text.

- Avoid tiny text and dense math equations on posters.

- Big text and pictures attract more attention and make it easier to explain the content.

2. Key takeaway: Camouflaging adversarial patches can make them difficult to detect.

🥇92 01:24

Camouflaging adversarial patches within an image can make them difficult for humans and AI to detect.

- A patch that blends into the surrounding image can evade automated patch detectors.

- The attack modifies only a small region of the image, yet it still causes the AI system to fail.
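To make the idea concrete, here is a toy sketch, not the method presented at the poster. A hypothetical linear classifier scores a flattened image, and the attack edits only the pixels inside a patch mask. The penalty weight `lam` is an assumed stand-in for a camouflage constraint: it keeps the patch close to the original pixels, so a larger `lam` gives a less visible patch with a smaller effect on the score.

```python
"""Toy camouflaged-patch attack on a linear scorer (illustrative only)."""


def score(pixels, weights, bias=0.0):
    """Linear classifier score; positive means the target class is detected."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def camouflaged_patch_attack(image, weights, mask, lam=1.0, lr=0.05, steps=200):
    """Lower the score by editing only the pixels listed in `mask`.

    Minimizes score(x') + lam * sum_{i in mask} (x'_i - x_i)^2 with projected
    gradient descent; the squared term keeps the patch close to the original
    pixels, a simple (assumed) proxy for camouflage.
    """
    patched = list(image)
    for _ in range(steps):
        for i in mask:
            # Gradient of the objective w.r.t. pixel i: linear term plus penalty.
            grad = weights[i] + 2.0 * lam * (patched[i] - image[i])
            # Gradient step, then clip to the valid pixel range [0, 1].
            patched[i] = min(1.0, max(0.0, patched[i] - lr * grad))
    return patched


if __name__ == "__main__":
    image = [0.5] * 8
    weights = [1.0] * 8
    mask = [2, 3]  # the patch covers two of the eight pixels
    for lam in (0.5, 5.0):
        adv = camouflaged_patch_attack(image, weights, mask, lam=lam)
        print(lam, round(score(image, weights), 3), round(score(adv, weights), 3))
```

With these inputs the weakly penalized patch (`lam=0.5`) drives its pixels to 0 and lowers the score from 4.0 to 3.0, while the strongly penalized one (`lam=5.0`) settles near 0.4 per pixel and only lowers it to about 3.8.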

3. Key takeaway: Exemplar-free continual learning allows learning new classes without forgetting old ones.

🥈88 04:59

Exemplar-free continual learning enables learning new classes without forgetting previously learned ones.

- This approach is useful when you cannot access old training data.

- It lets a model absorb new classes without retraining on the combined old and new data.
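One common way to do this, sketched here as an assumption rather than as the poster's exact method, is to keep a single mean feature vector (prototype) per class instead of stored exemplars. Features are assumed to come from a frozen feature extractor that is not shown; `PrototypeClassifier` is a hypothetical name.

```python
"""Exemplar-free class-incremental learning with class-mean prototypes (sketch)."""

import math


class PrototypeClassifier:
    """Stores one mean feature vector per class instead of raw exemplars."""

    def __init__(self):
        self.prototypes = {}  # class label -> mean feature vector

    def add_classes(self, features, labels):
        """Learn new classes from the new data only; old prototypes are untouched.

        A label that appears again in a later call would have its prototype
        replaced by the mean of the new batch.
        """
        sums, counts = {}, {}
        for x, y in zip(features, labels):
            if y not in sums:
                sums[y] = [0.0] * len(x)
                counts[y] = 0
            sums[y] = [s + v for s, v in zip(sums[y], x)]
            counts[y] += 1
        for y in sums:
            self.prototypes[y] = [s / counts[y] for s in sums[y]]

    def predict(self, x):
        """Return the label of the nearest prototype (Euclidean distance)."""
        return min(self.prototypes, key=lambda y: math.dist(x, self.prototypes[y]))
```

Each call to `add_classes` only needs the new task's features, which is what makes the scheme exemplar-free; the old classes survive as their stored means.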

4. Key takeaway: Mahalanobis distance can improve feature space comparison.

🥇91 08:34

Unlike Euclidean distance, Mahalanobis distance accounts for how each class's data is distributed in the feature space.

- It uses the covariance matrix of each class, so deviations along directions in which a class naturally varies a lot count for less.

- This gives a better comparison between a test feature and each class, which improves performance in continual learning.
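The distance of a feature x to class c is d_c(x) = sqrt((x - μ_c)ᵀ Σ_c⁻¹ (x - μ_c)), where μ_c and Σ_c are the class mean and covariance. The sketch below assumes 2-D features so the 2x2 inverse can be written in closed form; the small diagonal term `eps` is an assumed regularizer for numerical stability.

```python
"""Per-class Mahalanobis distance for 2-D features (illustrative sketch)."""

import math


def mean_and_cov(points, eps=1e-6):
    """Sample mean and 2x2 covariance; eps is added to the diagonal for stability."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1) + eps
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1) + eps
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    return (mx, my), ((sxx, sxy), (sxy, syy))


def mahalanobis(x, mean, cov):
    """sqrt((x - mu)^T Sigma^{-1} (x - mu)) using the closed-form 2x2 inverse."""
    (a, b), (_, d) = cov
    det = a * d - b * b
    dx, dy = x[0] - mean[0], x[1] - mean[1]
    # Sigma^{-1} = (1 / det) * [[d, -b], [-b, a]]
    q = (d * dx * dx - 2 * b * dx * dy + a * dy * dy) / det
    return math.sqrt(q)
```

For example, with a class spread widely along x around (0, 0) and a tight class around (6, 2), the query (5, 0) is closer to the tight class in Euclidean terms but closer to the wide class in Mahalanobis terms, because a large x-offset is unsurprising for a class that varies mostly along x.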

5. Key takeaway: Inference results can vary depending on hardware and computation.

🥈87 12:26

Inference results can differ across hardware platforms, and even between sessions on the same GPU.

- Different hardware platforms and GPUs can produce different results.

- Contributing factors include the order in which floating-point operations are accumulated (floating-point addition is not associative) and the choice of convolution algorithm.
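A minimal illustration of the floating-point part: GPU kernels split large sums (inside matrix multiplications and convolutions) into parallel partial sums, and the accumulation order can change between kernels, hardware, or runs. The same three numbers summed in two orders already give different results.

```python
"""Same numbers, different summation order, different float result."""

import math


def sequential_sum(values):
    """Left-to-right accumulation, like a single-threaded reduction."""
    total = 0.0
    for v in values:
        total += v
    return total


order_a = [1e16, 1.0, -1e16]
order_b = [1e16, -1e16, 1.0]  # same values, reordered

print(sequential_sum(order_a))  # 0.0: the 1.0 is rounded away when added to 1e16
print(sequential_sum(order_b))  # 1.0
print(math.fsum(order_a), math.fsum(order_b))  # 1.0 1.0 (exactly rounded sum)
print((0.1 + 0.2) + 0.3 == 0.1 + (0.2 + 0.3))  # False
```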

6. Key takeaway: Be aware that inference in machine learning is not deterministic.

🥈86 14:16

Inference in machine learning is not always deterministic and can have variations.

- Inference results can be influenced by factors like hardware and computation.

- Understanding the non-deterministic nature of inference is important for interpreting and reproducing results correctly.
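In practice this means comparing outputs from different runs or devices with a tolerance rather than exact equality. The helper below is a hypothetical sketch; the default `rel_tol` and `abs_tol` values are assumptions and should be chosen per model and numeric precision.

```python
"""Compare inference outputs with a tolerance instead of exact equality."""

import math


def outputs_match(reference, candidate, rel_tol=1e-5, abs_tol=1e-6):
    """True if both output vectors have the same length and agree element-wise."""
    if len(reference) != len(candidate):
        return False
    return all(
        math.isclose(r, c, rel_tol=rel_tol, abs_tol=abs_tol)
        for r, c in zip(reference, candidate)
    )
```

For example, `[0.1 + 0.2]` and `[0.3]` are not equal as Python floats, but `outputs_match` treats them as the same result.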

7. Key takeaway: Conservative estimation of value functions in reinforcement learning.

🥈83 14:21

This paper proposes a method for conservatively estimating value functions in reinforcement learning.

- The method imposes penalties so that the learned value function is conservative, meaning it tends to underestimate rather than overestimate values.

- Conservative estimation helps ensure stability and reliability in reinforcement learning.
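As an example of such a penalty, and not necessarily the one used in this paper, here is the well-known conservative Q-learning (CQL) regularizer for discrete actions. It pushes down a soft maximum (log-sum-exp) of Q over all actions while pushing up Q on the action actually seen in the data; `alpha` is an assumed penalty weight.

```python
"""CQL-style conservative penalty for discrete actions (illustrative sketch)."""

import math


def logsumexp(xs):
    """Numerically stable log(sum(exp(x)))."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def conservative_penalty(q_values, data_action, alpha=1.0):
    """alpha * (logsumexp_a Q(s, a) - Q(s, a_data)) for a single state.

    Added to the usual TD loss and minimized, this pushes Q down on all
    actions in proportion to their softmax weight and up on the dataset action.
    """
    return alpha * (logsumexp(q_values) - q_values[data_action])
```

Because log-sum-exp is always larger than the maximum, the penalty is positive whenever there is more than one action, and it is larger when the dataset action has a low Q value relative to the others.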

8. Analytical solution for estimating the value function.

🥈85 15:03

An analytical solution is used to estimate the value function from the dataset, giving a conservative estimate of the state values.

- The analytical solution is derived from the equation that maximizes the value function.

- This estimation method improves performance compared to other methods.

9. Introduction of a new network for Q.

🥈88 15:58

To improve performance, a new network for Q is introduced in addition to the existing network for V.

- With both V and Q available, the advantage A = Q - V can be computed, which makes it possible to use the classic Advantage Weighted Regression (AWR) method.

- Adding the Q network improves the algorithm's performance.
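A minimal sketch of the AWR step, assuming Q and V estimates are already available for each dataset sample: each action's log-likelihood is weighted by exp(A / temperature) with A = Q - V. The `temperature` and `max_weight` values are assumptions; clipping the weight (applied here in log space to avoid overflow) is a common practical choice, not something stated in the talk.

```python
"""Advantage-weighted regression (AWR) weights from Q and V (sketch)."""

import math


def awr_weights(q_values, v_values, temperature=1.0, max_weight=100.0):
    """exp((Q - V) / temperature) per sample, clipped at max_weight."""
    log_cap = math.log(max_weight)
    weights = []
    for q, v in zip(q_values, v_values):
        # Clip in log space so large advantages cannot overflow math.exp.
        scaled = min((q - v) / temperature, log_cap)
        weights.append(math.exp(scaled))
    return weights


def awr_policy_loss(log_probs, weights):
    """Negative weighted log-likelihood of the dataset actions (mean over batch)."""
    return -sum(w * lp for w, lp in zip(weights, log_probs)) / len(log_probs)
```

Actions with positive advantage (better than the state's value) get weights above 1 and are imitated more strongly; actions with negative advantage are down-weighted but never given negative weight, so the policy stays close to the data.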

10. Bonus term for policy performance.

🥇92 17:02

A bonus term is added to the algorithm to encourage exploration and improve policy performance.

- The bonus term is calculated based on the maximum Q value and serves as an incentive for better performance.

- This approach strikes a balance between conservative estimation and exploration.